Diffusion models in SO3 space

One typical way to represent the structure of a protein is to use the position of the alpha carbon and the rotation matrix of each residue. The diffusion process on the alpha carbon positions takes place in ordinary Euclidean space, but the diffusion process on the rotation matrices ($SO(3)$ space) is non-Euclidean.

In this notebook, I will show the general formulation of diffusion models in $SO(3)$ space.

Score function and noise adding process

Just like the diffusion process in Euclidean space, the core of the diffusion process in $SO(3)$ space consists of the score function and the noise-adding process. To understand the diffusion process in $SO(3)$ space, I will first show the noise-adding process (a random walk) in 3D space.

Random walk in 3D space

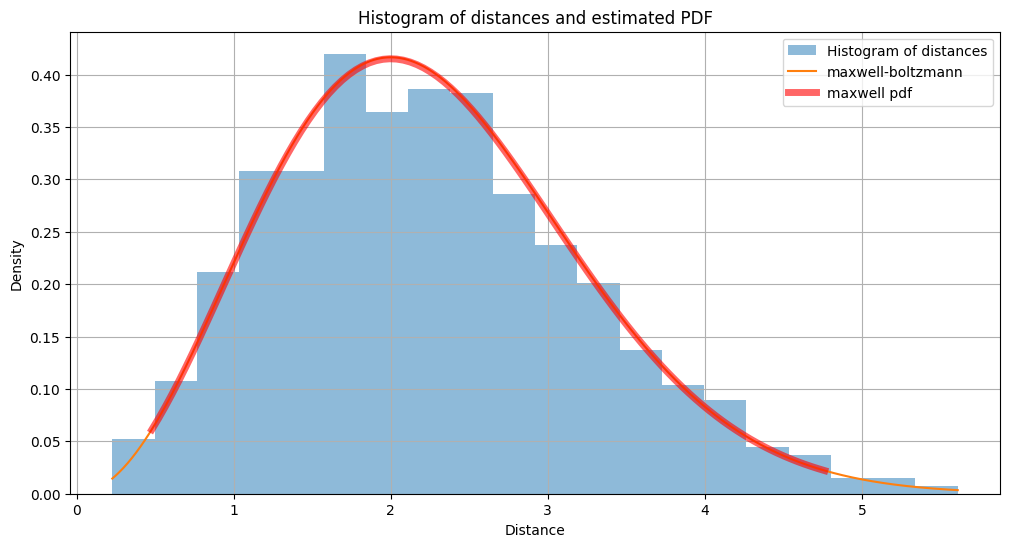

We draw each step from a Gaussian distribution with std = 0.1 in each of the x, y, and z directions. After 200 steps, the distance from the origin follows a Maxwell-Boltzmann distribution with scale parameter $0.1\sqrt{200} \approx 1.41$, which is the per-coordinate std of the final position.
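The 3D random walk above can be checked numerically. The following is a minimal sketch, assuming NumPy; the function name `random_walk_3d`, the number of walkers, and the seed are illustrative choices, not taken from the notebook. It compares the empirical mean distance with the mean of a Maxwell-Boltzmann distribution, $2a\sqrt{2/\pi}$, where $a$ is the scale parameter.

```python
import numpy as np

def random_walk_3d(n_walkers=5000, n_steps=200, step_std=0.1, seed=0):
    """Return the distance from the origin of each walker after n_steps."""
    rng = np.random.default_rng(seed)
    # Each step is an independent Gaussian displacement in x, y, z.
    steps = rng.normal(0.0, step_std, size=(n_steps, n_walkers, 3))
    positions = steps.sum(axis=0)             # final position of each walker
    return np.linalg.norm(positions, axis=1)  # distance from the origin

distances = random_walk_3d()
a = 0.1 * np.sqrt(200)                          # Maxwell-Boltzmann scale parameter
expected_mean = 2.0 * a * np.sqrt(2.0 / np.pi)  # mean of a Maxwell-Boltzmann distribution
print(distances.mean(), expected_mean)
```

The two printed values should agree up to sampling noise, which shrinks as the number of walkers grows.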

Random walk in SO3 space

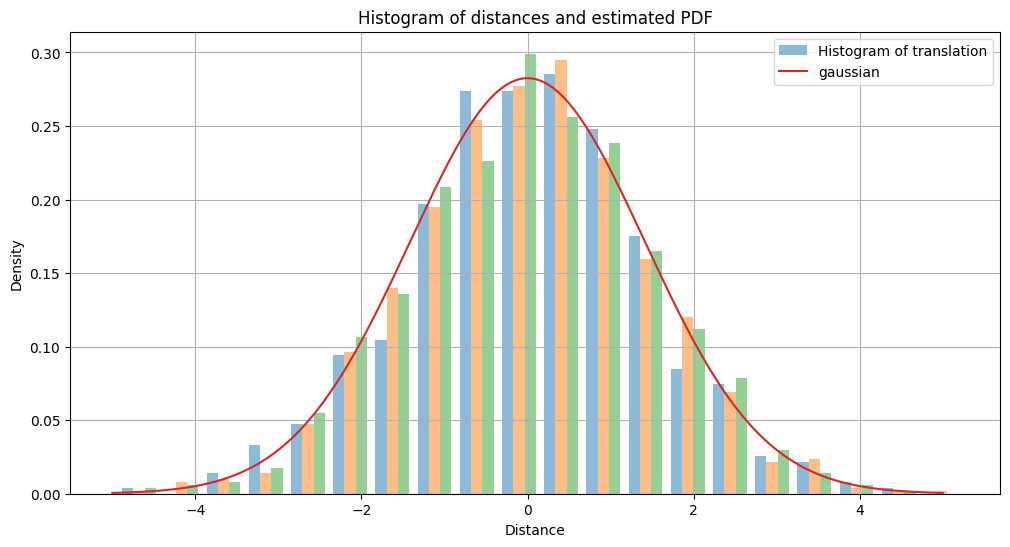

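As in the 3D case, we can perform a random walk in $SO(3)$ space by sampling angles from Gaussian distributions and composing them with the Lie algebra basis matrices. Formally,

$$R_{t+1} = R_t \exp\left(\sum_{i=1}^{3} \epsilon_i G_i\right), \qquad \epsilon_i \sim \mathcal{N}(0, \sigma^2),$$

where $G_1, G_2, G_3$ are the basis matrices of the Lie algebra $\mathfrak{so}(3)$ and $\sigma$ is the standard deviation of a single step.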

Output: the standard deviation after step $k$ is $0.2\sqrt{k}$ for $k = 1, \dots, 100$ (0.2000, 0.2828, 0.3464, ..., 2.0000); angle list shape (100, 5000).

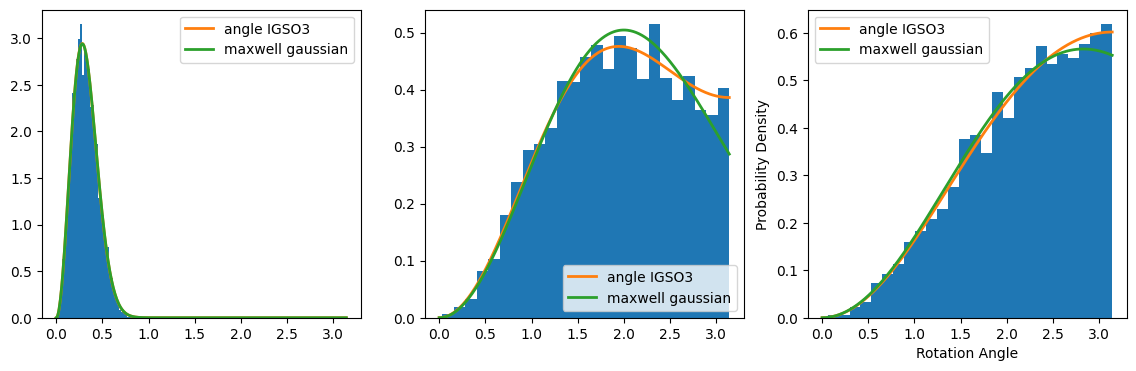

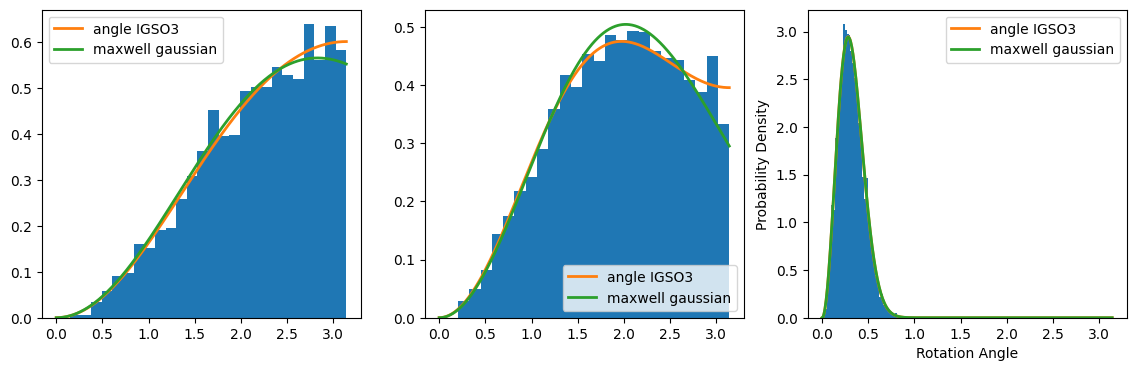
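The $SO(3)$ random walk described above can be sketched as follows. This is a minimal sketch, assuming NumPy, right-multiplication of each increment, and Rodrigues' formula in place of a general matrix exponential. The helper names (`exp_so3`, `rotation_angle`, `so3_random_walk`) are hypothetical and not taken from the notebook; the defaults (5000 walkers, 100 steps, step std 0.2) mirror the printed output.

```python
import numpy as np

# Basis of the Lie algebra so(3): generators of rotations about x, y, z.
G = np.array([
    [[0, 0, 0], [0, 0, -1], [0, 1, 0]],
    [[0, 0, 1], [0, 0, 0], [-1, 0, 0]],
    [[0, -1, 0], [1, 0, 0], [0, 0, 0]],
], dtype=float)

def exp_so3(coeffs):
    """Rodrigues' formula: map coefficients (n, 3) to rotation matrices (n, 3, 3)."""
    theta = np.linalg.norm(coeffs, axis=1)[:, None, None]
    theta = np.maximum(theta, 1e-12)         # avoid division by zero
    K = np.einsum("ni,ijk->njk", coeffs, G)  # skew-symmetric matrices
    return (np.eye(3) + np.sin(theta) / theta * K
            + (1.0 - np.cos(theta)) / theta**2 * (K @ K))

def rotation_angle(R):
    """Rotation angle in [0, pi] of each matrix in a batch (n, 3, 3)."""
    cos_w = (np.trace(R, axis1=-2, axis2=-1) - 1.0) / 2.0
    return np.arccos(np.clip(cos_w, -1.0, 1.0))

def so3_random_walk(n_walkers=5000, n_steps=100, step_std=0.2, seed=0):
    rng = np.random.default_rng(seed)
    R = np.tile(np.eye(3), (n_walkers, 1, 1))  # all walkers start at the identity
    angles = np.empty((n_steps, n_walkers))
    for k in range(n_steps):
        eps = rng.normal(0.0, step_std, size=(n_walkers, 3))
        R = R @ exp_so3(eps)                   # compose with a random increment
        angles[k] = rotation_angle(R)
    return R, angles

R_final, angles = so3_random_walk()
print(angles.shape)
```

Because the increments are isotropic, left-multiplication would give the same angle statistics; the choice here is only a convention.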

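We find that the rotation angle $\omega$ in this noise-adding process follows the so-called IGSO3 (isotropic Gaussian on $SO(3)$) distribution, whose density is

$$f(\omega) = \frac{1 - \cos\omega}{\pi} \sum_{l=0}^{\infty} (2l + 1)\, e^{-l(l+1)\sigma^2/2}\, \frac{\sin\left((l + \tfrac{1}{2})\omega\right)}{\sin(\omega/2)}, \qquad \omega \in [0, \pi],$$

where $\sigma$ is the accumulated standard deviation of the walk.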
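To make the IGSO3 angle density concrete, here is a minimal sketch that evaluates a truncated version of the series, assuming NumPy and the convention in which the exponent is $-l(l+1)\sigma^2/2$ (other references absorb the factor of 2 into the noise parameter). The function name `igso3_angle_density` and the truncation `l_max` are illustrative assumptions.

```python
import numpy as np

def igso3_angle_density(omega, sigma, l_max=200):
    """Truncated series for the IGSO(3) density of the rotation angle omega in (0, pi]."""
    omega = np.asarray(omega, dtype=float)[..., None]  # shape (..., 1)
    l = np.arange(l_max + 1)                            # shape (l_max + 1,)
    terms = ((2 * l + 1) * np.exp(-l * (l + 1) * sigma**2 / 2.0)
             * np.sin((l + 0.5) * omega) / np.sin(omega / 2.0))
    return (1.0 - np.cos(omega[..., 0])) / np.pi * terms.sum(axis=-1)

# Start slightly above zero to avoid 0/0 in sin(.)/sin(omega/2).
omega_grid = np.linspace(1e-4, np.pi, 2000)
density = igso3_angle_density(omega_grid, sigma=0.5)
# Trapezoidal rule: the density should integrate to about 1 over [0, pi].
total = np.sum(0.5 * (density[1:] + density[:-1]) * np.diff(omega_grid))
mode = omega_grid[np.argmax(density)]
print(total, mode)
```

For small $\sigma$ the curve is close to a Maxwell-Boltzmann distribution with scale $\sigma$, which matches the 3D random walk; for large $\sigma$ it approaches the uniform angle density $(1 - \cos\omega)/\pi$.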

Denoising dynamics in general and in SO3 space

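The denoising dynamics can in general be formulated as a reverse SDE corresponding to a continuous-time forward SDE. A remarkable result from Anderson states that, for a forward SDE

$$dx = f(x, t)\,dt + g(t)\,dw,$$

the reverse-time SDE is

$$dx = \left[f(x, t) - g(t)^2\,\nabla_x \log p_t(x)\right]dt + g(t)\,d\bar{w},$$

where $p_t$ is the marginal density of $x$ at time $t$ and $\bar{w}$ is a Wiener process running backward in time. Note that this formulation is in Euclidean space. In the $SO(3)$ case, we have to use the formulation in Lie algebra space and then exponentiate back to $SO(3)$ space.

To write it more explicitly, the random walk in Lie algebra space is

$$dv = \sum_{i=1}^{3} \epsilon_i G_i,$$

where the $\epsilon_i$ are sampled from three Gaussian distributions with standard deviation $\sigma$ and the $G_i$ are the three Lie algebra basis matrices.

Since this walk has no drift, one step of the reverse process (taken backward in time) in Lie algebra space is

$$dv = \sigma^2\,\nabla \log p_t(R_t) + \sum_{i=1}^{3} \epsilon_i G_i.$$

Exponentiating back to a rotation matrix gives the reverse process in $SO(3)$ space:

$$R_{t-1} = R_t \exp\left(\sigma^2\,\nabla \log p_t(R_t) + \sum_{i=1}^{3} \epsilon_i G_i\right),$$

where $p_t(R_t)$ is the probability density of the matrix $R_t$ under the marginal distribution of the forward process. It then only remains to compute the expression $\nabla \log p_t(R_t)$, which is also called the score function.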
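Before moving to $SO(3)$, the reverse-SDE idea can be sanity-checked in one Euclidean dimension, where the score is known in closed form. The sketch below assumes NumPy, a driftless forward walk, and Gaussian data, so that every marginal is Gaussian with variance `data_std**2 + k * step_std**2`. The function name and all parameter values are illustrative assumptions, not taken from the notebook.

```python
import numpy as np

def reverse_diffusion_1d(n_samples=20000, n_steps=400, step_std=0.05,
                         data_std=0.5, seed=0):
    """Run the reverse of a driftless Gaussian random walk using the exact score."""
    rng = np.random.default_rng(seed)
    # Marginal variance after k forward steps, starting from N(0, data_std^2).
    var = data_std**2 + step_std**2 * np.arange(n_steps + 1)
    x = rng.normal(0.0, np.sqrt(var[-1]), size=n_samples)  # sample from the final marginal
    for k in range(n_steps, 0, -1):
        score = -x / var[k]                  # grad_x log p_k(x) for a zero-mean Gaussian
        noise = rng.normal(0.0, step_std, size=n_samples)
        x = x + step_std**2 * score + noise  # one reverse step
    return x

x0 = reverse_diffusion_1d()
print(x0.std())
```

The printed standard deviation should be close to `data_std`, up to a small discretization error that decreases with smaller steps. The $SO(3)$ version has the same structure, with the update applied in the Lie algebra and then exponentiated.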

Score function in SO3 space

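The score function in $SO(3)$ space can be written using the chain rule:

$$\nabla_R \log p_t(R) = \frac{d \log p_t(\omega)}{d\omega}\,\nabla_R\,\omega, \qquad \omega = \arccos\left(\frac{\operatorname{tr}(R) - 1}{2}\right),$$

where $\omega$ is the rotation angle of $R$ and $p_t(\omega)$ denotes the density of $R$ expressed as a function of $\omega$.

The gradient of the rotation angle, expressed in the Lie algebra, is

$$\nabla_R\,\omega = \frac{R - R^\top}{2\sin\omega}.$$

This can be written in a compact form using the identity $\log R = \frac{\omega}{2\sin\omega}\left(R - R^\top\right)$:

$$\nabla_R \log p_t(R) = \frac{d \log p_t(\omega)}{d\omega}\,\frac{\log R}{\omega}.$$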
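The chain-rule form of the score can be sketched numerically. The following is a minimal sketch, assuming NumPy, a density taken with respect to the Haar measure (the IGSO3 series without the $(1 - \cos\omega)/\pi$ factor), and a finite-difference derivative in place of an analytic one. The names `haar_density` and `so3_score` are hypothetical, and the code is not valid at $\omega = 0$ or $\omega = \pi$, where $\sin\omega$ vanishes.

```python
import numpy as np

def haar_density(omega, sigma, l_max=200):
    """IGSO(3) density with respect to the Haar measure, up to a constant factor."""
    l = np.arange(l_max + 1)
    return np.sum((2 * l + 1) * np.exp(-l * (l + 1) * sigma**2 / 2.0)
                  * np.sin((l + 0.5) * omega) / np.sin(omega / 2.0))

def so3_score(R, sigma, h=1e-4):
    """Score of one rotation matrix R as a skew-symmetric (Lie algebra) matrix."""
    omega = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    grad_omega = (R - R.T) / (2.0 * np.sin(omega))  # equals log(R) / omega
    # Central finite difference of log p(omega) with respect to omega.
    dlogp = (np.log(haar_density(omega + h, sigma))
             - np.log(haar_density(omega - h, sigma))) / (2.0 * h)
    return dlogp * grad_omega, dlogp

# Example: rotation by 0.5 rad about the z axis.
w = 0.5
R_z = np.array([[np.cos(w), -np.sin(w), 0.0],
                [np.sin(w),  np.cos(w), 0.0],
                [0.0,        0.0,       1.0]])
score, dlogp = so3_score(R_z, sigma=0.5)
print(dlogp)
print(score)
```

For this example the score is a negative multiple of the z-axis generator, so a reverse step moves the rotation back toward the identity, as expected for a density that peaks there.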

Output: std all is $0.2\sqrt{k}$ for $k = 1, \dots, 100$ (from 0.2000 to 2.0000); rot_mats shape (5000, 3, 3); angle back list shape (100, 5000); score_list shape (0,).

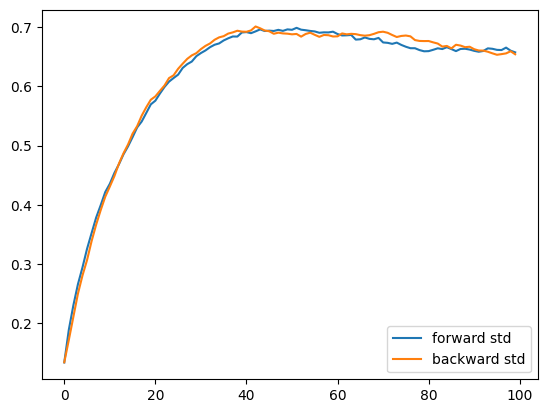

Mean and std of the forward and backward process

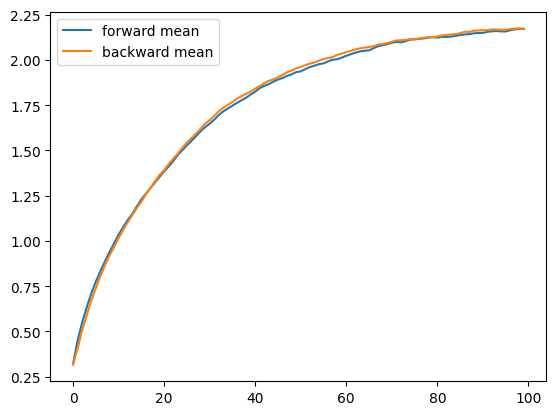

Plotting the mean and std of the forward and backward processes shows that the two are consistent.

We can also plot the rotation angle distribution along the denoising process.

Linfeng Zhang